Zakhar Brain Service

Yesterday I merged a big software update to Zakhar’s Raspberry Pi Unit - brain_service.

The update brings a service that reports the robot’s status (network, OS status) and gives many simultaneously connected clients access to the CAN bus. The service also tracks the presence of other robot Units on the CAN bus.
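To picture the presence tracking, here is a minimal sketch. It assumes each Unit announces itself on the bus from time to time and counts as gone once nothing has been heard from it for a while. The class name, the method names and the 2-second timeout are all made up for illustration and are not taken from brain_service.

```python
import time


class PresenceTracker:
    """Remembers when each Unit was last heard from (hypothetical helper)."""

    def __init__(self, timeout_s=2.0, clock=time.monotonic):
        self.timeout_s = timeout_s  # assumed value, not from brain_service
        self._clock = clock         # injectable so the logic can be tested
        self._last_seen = {}        # unit id -> timestamp of last announcement

    def mark_seen(self, unit_id):
        """Call whenever a presence announcement from unit_id arrives."""
        self._last_seen[unit_id] = self._clock()

    def present_units(self):
        """Return the sorted ids of Units heard from within the timeout."""
        now = self._clock()
        return sorted(
            uid for uid, seen in self._last_seen.items()
            if now - seen <= self.timeout_s
        )
```

The CAN reading loop would call `mark_seen()` for every presence announcement, and a status client would ask for `present_units()` when it needs the list.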

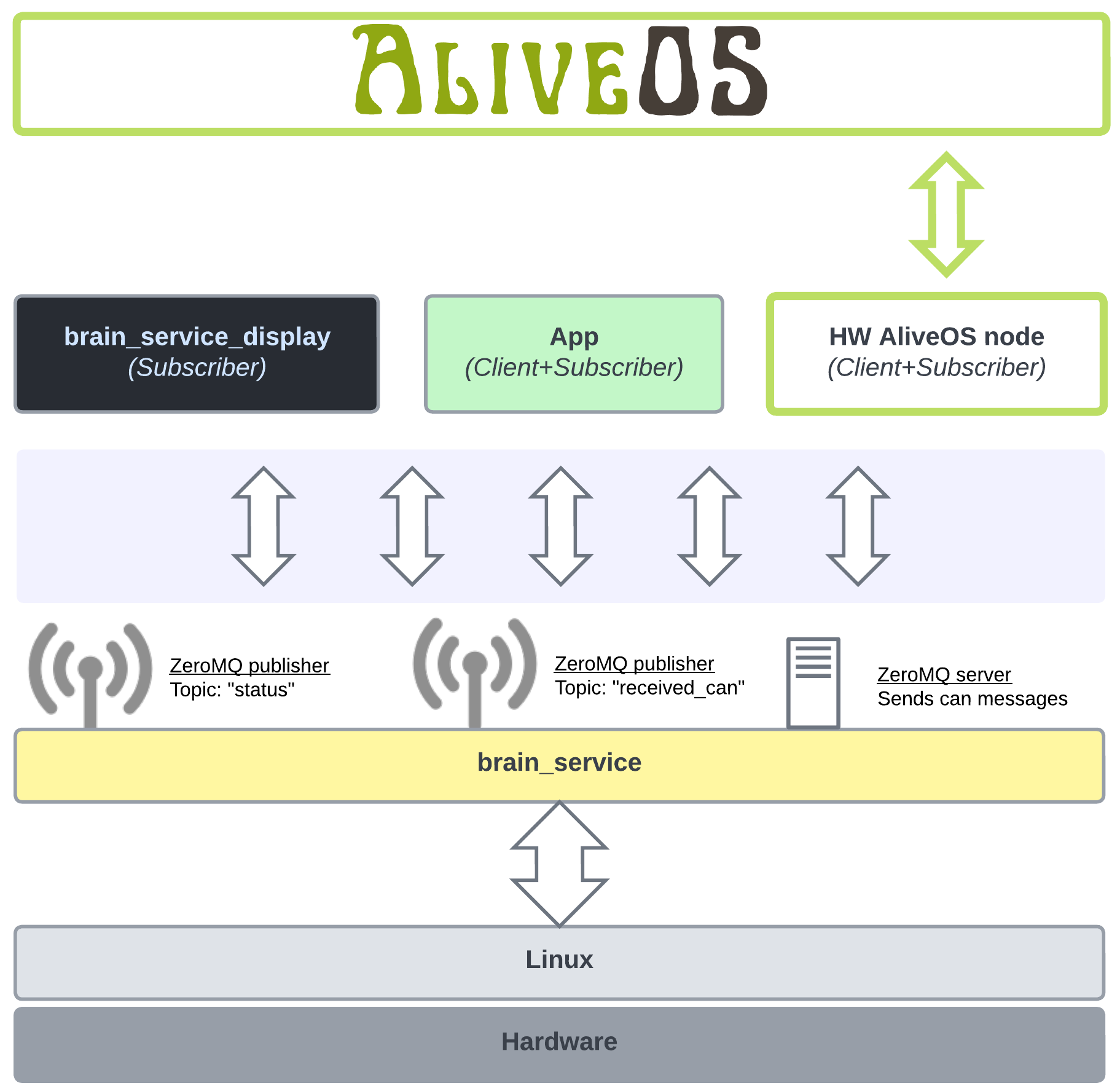

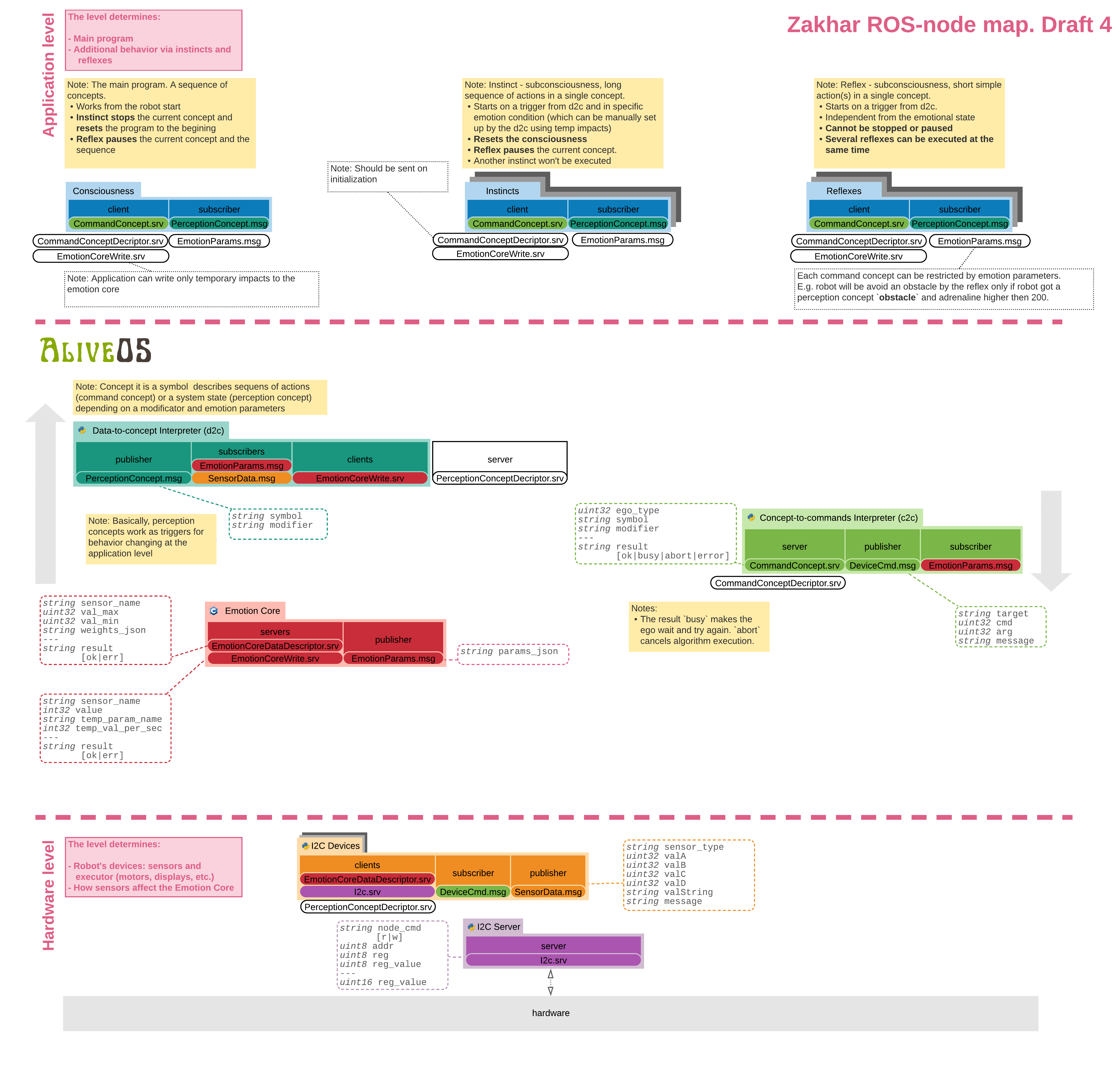

Brain Software Architecture

Before updating AliveOS to support qCAN (my CAN bus-based protocol), I have one last thing to do. To simplify my life in the future, I need a CAN publisher that can publish messages to many subscribers. My main subscriber is, of course, AliveOS, but to display information about connected devices I also need a second subscriber - a service listening only to qCAN Present messages.

To do this I will use ZeroMQ - a messaging library that is well supported and documented for many programming languages. I’m going to update my brain_service to support it, and the service will be responsible for all interaction with the Raspberry Pi.
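Here is a rough sketch of how one publisher could feed several subscribers using pyzmq's PUB/SUB sockets. The topic names, the port and the byte layout of a frame are my assumptions for illustration, not the actual brain_service format. ZeroMQ SUB sockets filter by prefix on the first message part, so a subscriber to `can` also receives `can.present` messages, while a subscriber to `can.present` receives only those.

```python
import struct

TOPIC_ALL = b"can"              # hypothetical topic prefix for every frame
TOPIC_PRESENT = b"can.present"  # hypothetical topic for presence frames


def pack_frame(can_id, data):
    """Serialize a frame as: 4-byte big-endian id, 1-byte length, payload."""
    if len(data) > 8:
        raise ValueError("classic CAN frames carry at most 8 data bytes")
    return struct.pack(">IB", can_id, len(data)) + bytes(data)


def unpack_frame(blob):
    """Inverse of pack_frame: return (can_id, payload bytes)."""
    can_id, length = struct.unpack(">IB", blob[:5])
    return can_id, blob[5:5 + length]


def publish(sock, topic, can_id, data):
    """Send one frame as a two-part message: topic first, then the frame."""
    sock.send_multipart([topic, pack_frame(can_id, data)])


def make_publisher(endpoint="tcp://*:5556"):  # port is an assumption
    import zmq  # pyzmq, imported lazily so the helpers above work without it
    sock = zmq.Context.instance().socket(zmq.PUB)
    sock.bind(endpoint)
    return sock


def make_subscriber(topic, endpoint="tcp://localhost:5556"):
    import zmq
    sock = zmq.Context.instance().socket(zmq.SUB)
    sock.connect(endpoint)
    sock.setsockopt(zmq.SUBSCRIBE, topic)  # prefix match on the first part
    return sock
```

With this layout AliveOS would call `make_subscriber(TOPIC_ALL)` and the presence display would call `make_subscriber(TOPIC_PRESENT)`; each then reads `topic, blob = sock.recv_multipart()` and decodes the frame with `unpack_frame(blob)`.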

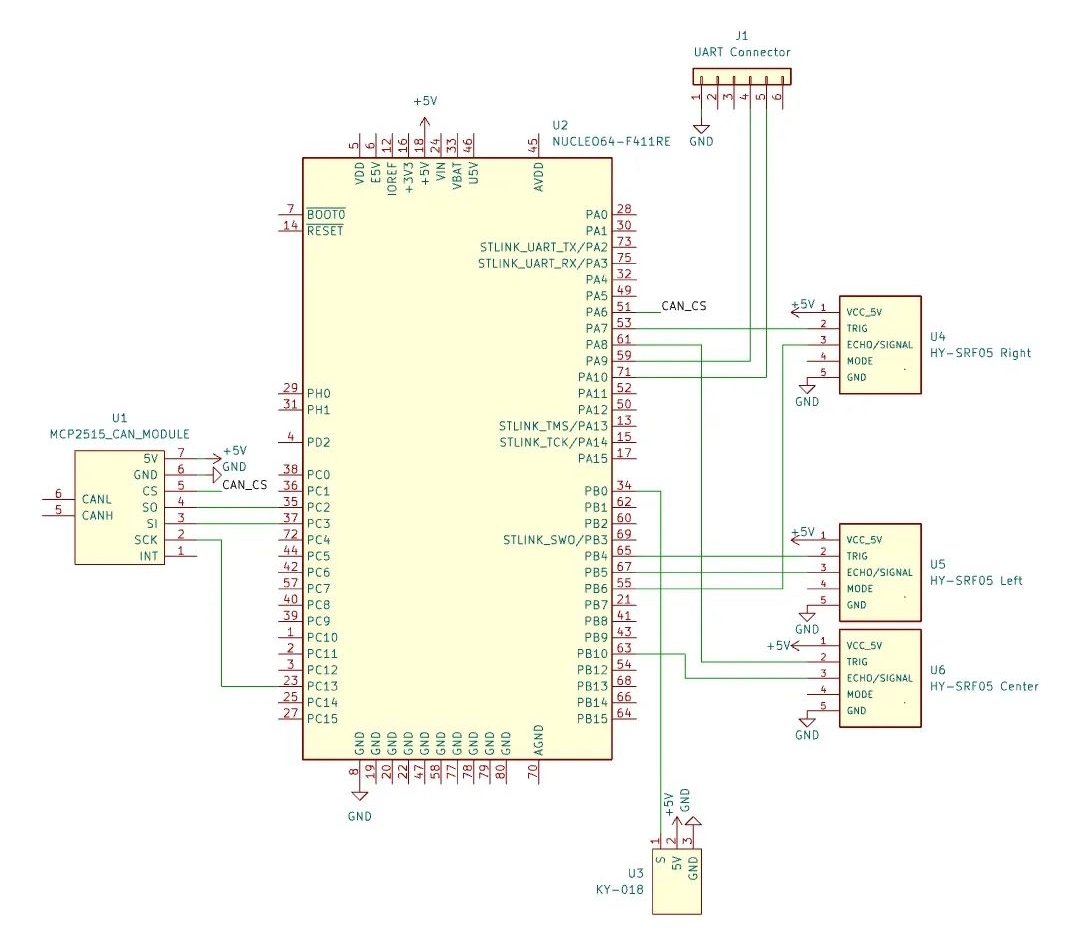

Sensor Unit with qCAN Support is Merged!

Updated code for the Sensor Unit with qCAN support is merged and documented!

- Source code: https://github.com/Zakhar-the-Robot/io_sensors

- Documentation: https://zakhar-the-robot.github.io/doc/docs/systems/io_sensors/

The Wheeled Platform with CAN support is merged!

A new step in Zakhar’s global transition to the CAN bus is done!

CAN Bus and a New Simple Protocol

Not Only Two Wires

It’s been more than two years since I started working on my robot Zakhar. Zakhar 1 was built out of Lego and relied on I2C communication between modules. It was a nightmare because, as it turned out, each MCU developer has their own understanding of how a developer should interact with the I2C unit. What I wanted from the interface: